Meta was founded as Facebook Inc. in 2004 by Mark Zuckerberg, Andrew McCollum, Chris Hughes, Eduardo Saverin, Rob Jagnow, Dustin Moskovitz, and Nicholas Daniel-Richards while they were students at Harvard. Its original product, Facebook, is a social networking platform that enables users to connect by sharing pictures or written posts and to run ad campaigns.

Before founding Facebook, Mark Zuckerberg created and published a website called FaceMash in October 2003 for rating the attractiveness of Harvard students. FaceMash quickly gained popularity, reaching approximately 22,000 photo views within a few days of its launch before being shut down. Because Zuckerberg did not have permission to use the student photos, he was summoned before the Harvard Administrative Board and faced possible expulsion. The board allowed Zuckerberg to continue his education at Harvard, and in February 2004 he created Thefacebook with several fellow Harvard students: Andrew McCollum, Chris Hughes, Dustin Moskovitz, Eduardo Saverin, and Nicholas Daniel-Richards.

Thefacebook remained the company's name until August 23, 2005, when the company purchased facebook.com for $200,000 and changed its name to Facebook. In November 2010, Facebook also bought FB.com from the American Farm Bureau Federation for $8.5 million so that its employees could have FB.com email addresses. As part of the deal, the American Farm Bureau Federation also relinquished its rights to use FB.org.

On October 28, 2021, Facebook rebranded as Meta. Facebook, WhatsApp, and Instagram will retain their names, but the company that produces and maintains them is now named Meta. The name was chosen to evoke the product Zuckerberg hopes will come to represent the company: the metaverse, a shared online 3D virtual space that several companies are interested in developing as a kind of future version of the internet.

On April 9, 2012, Facebook acquired Instagram, a social media company focused on sharing user photos, for $1 billion in a combination of Facebook stock and cash. Instagram had approximately 30 million active users at the time, making it Facebook's largest acquisition to date in terms of both active audience size and price. Mark Zuckerberg, the CEO and co-founder of Facebook, made the following statement about the decision to purchase Instagram:

We don't plan on doing many more of these, if any at all, but providing the best photo sharing experience is one reason why so many people love Facebook and we knew it would be worth bringing these two companies together.

In February 2014, Facebook acquired WhatsApp, a social messaging platform, for $19 billion. Facebook made the acquisition as a defensive investment after analyzing data about WhatsApp and concluding that WhatsApp could compete with Facebook. Facebook issued 177.8 million shares of its Class A common stock and $4.59 billion in cash to WhatsApp's shareholders, and 45.9 million restricted stock units to WhatsApp's employees. At the time of the acquisition, WhatsApp had approximately 450 million users, was gaining about 1 million users every day, and, according to OnDevice Research, was the world's most popular smartphone messaging application.

In July 2014, Facebook acquired Oculus, a company making virtual reality products, for $2 billion: $400 million in cash and approximately $1.6 billion in Facebook stock. Facebook also offered an additional $300 million in stock and cash as performance incentives if Oculus met certain milestones after the acquisition. The $2 billion price was based on a Facebook share price of $69.35. Mark Zuckerberg, the CEO and co-founder of Facebook, commented on the decision to acquire Oculus and Facebook's plans for the company:

We’re going to focus on helping Oculus build out their product and develop partnerships to support more games. Oculus will continue operating independently within Facebook to achieve this.

Facebook Reality Labs is a division of Facebook focused on researching, developing, and manufacturing virtual reality (VR) and augmented reality (AR) technology and products. In 2020, Oculus and Facebook's AR/VR team were renamed Facebook Reality Labs to consolidate the company's AR and VR research and product development, both software and hardware, into a single division. Previously, Facebook's VR and AR efforts were spread across Facebook AR/VR, Spark AR, and Oculus Research, the last of which took its name from the acquired Oculus. The name change was partly intended to make clearer both the division's connection to Facebook and its mandate to develop AR and VR technologies. Facebook's Portal division, which focused on video conferencing smart screen devices, was also folded into Facebook Reality Labs.

Reflecting Facebook's renewed focus on AR and VR, Facebook Reality Labs has nearly 10,000 employees, almost a fifth of Facebook's worldwide workforce. By comparison, in 2017 the Oculus VR division, now part of Facebook Reality Labs, had roughly 1,000 or more employees, around 5 percent of Facebook's overall headcount. This shift of employees into Facebook Reality Labs indicates how much Facebook's interest in VR and AR has grown. In an interview with The Information about Facebook's VR and AR ambitions, CEO Mark Zuckerberg said:

Most of what Facebook does is... we're building on top of other people's platforms. I think it really makes sense for us to invest deeply to help shape what I think is going to be the next major computing platform, this combination of augmented and virtual reality, to make sure that it develops in this way that is fundamentally about people being present with each other and coming together.

Example of a wireless Oculus Quest VR headset.

Facebook has already shifted its VR focus away from headsets tethered to a computer and toward the Oculus Quest and Oculus Quest 2, standalone wireless devices that do not require a computer. The Quest 2 received five times as many preorders as its predecessor, and developers saw a boost in sales of existing titles.

Beyond AR and VR for video games, Facebook Reality Labs is developing products and technology that bring the physical and virtual worlds together, in ways the division believes could change how people use computers and engage with each other, much as smartphones did. This work includes wearable consumer technology enabling AR and VR experiences not currently available, as well as improvements to AR glasses, VR headsets, computer vision, audio, and graphics. Facebook Reality Labs says it is working toward technologies that include brain-computer interfaces, haptic interaction, eye/hand/face/body tracking, perception science, and telepresence.

Building on the success of Facebook's Oculus headsets, the most fundamental work of Facebook Reality Labs is developing VR hardware, which falls into three categories. The first is PC VR, which relies on the graphics card and processing power of a laptop or desktop tower. These headsets are said to offer the most immersive VR experience, with tracked touch controllers and six degrees of freedom. The second is all-in-one VR: standalone mobile headsets that deliver untethered VR without a computer. These are also the most accessible form of VR headset. The third is Mobile VR, which uses a smartphone's screen as an entry point into VR without the price or power requirements of the other headsets.
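To make "six degrees of freedom" concrete: a tracked pose has three translational degrees (left/right, up/down, forward/back) and three rotational ones (yaw, pitch, roll). The sketch below is a minimal illustration, not Oculus's tracking API; the class name, the OpenXR-style axis convention (+y up, -z forward), and the quaternion representation are assumptions. A headset limited to three degrees of freedom would track only the rotational part.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose6DoF:
    """Hypothetical headset pose: 3 translational + 3 rotational degrees of freedom.

    Assumed convention: metres, +y up, -z forward. A 3DoF tracker would
    report only the orientation quaternion (w, qx, qy, qz).
    """
    x: float
    y: float
    z: float
    w: float = 1.0   # unit quaternion holding the rotational DoF
    qx: float = 0.0
    qy: float = 0.0
    qz: float = 0.0

    @staticmethod
    def with_yaw(x, y, z, yaw_rad):
        # Rotation about the vertical (+y) axis by yaw_rad.
        return Pose6DoF(x, y, z, math.cos(yaw_rad / 2), 0.0, math.sin(yaw_rad / 2), 0.0)

    def rotate(self, v):
        # Quaternion rotation: v' = v + w*t + u x t, where u = (qx, qy, qz), t = 2 (u x v).
        ux, uy, uz = self.qx, self.qy, self.qz
        vx, vy, vz = v
        tx = 2 * (uy * vz - uz * vy)
        ty = 2 * (uz * vx - ux * vz)
        tz = 2 * (ux * vy - uy * vx)
        return (
            vx + self.w * tx + (uy * tz - uz * ty),
            vy + self.w * ty + (uz * tx - ux * tz),
            vz + self.w * tz + (ux * ty - uy * tx),
        )

    def to_world(self, point_in_head):
        # Apply the rotational DoF first, then the translational DoF.
        rx, ry, rz = self.rotate(point_in_head)
        return (rx + self.x, ry + self.y, rz + self.z)


# A user standing 1 m to the right at eye height 1.6 m, turned 90 degrees left:
pose = Pose6DoF.with_yaw(1.0, 1.6, 0.0, math.pi / 2)
print(pose.to_world((0.0, 0.0, -1.0)))  # approximately (0.0, 1.6, 0.0)
```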

Facebook Reality Labs created a Liquid Crystal Research Award to support academic work on optics and displays for AR and VR. It comprises six awards: one Diamond Award of $5,000, two Platinum Awards of $3,500, and three Gold Awards of $2,500, for a total of $19,500.

The overall goal of the award is to advance liquid crystal optics and display technologies and to find ways of integrating them into existing and emerging AR and VR optical and display solutions. This is intended to help Facebook Reality Labs improve the user experience and develop new designs in the AR and VR optical design space. More broadly, the award aims to encourage researchers in liquid crystal and cross-disciplinary fields to pursue research into optical and display technologies.

Facebook Reality Labs is developing new VR glasses designed to be as small and comfortable as an ordinary pair of glasses, rather than bulky like the VR headsets developed under the Oculus name. In a VR headset, focusing light from the display into the wearer's eyes requires a gap between the display and the lens, and this gap accounts for much of the headset's size. Some solutions exist that shorten this distance to make smaller headsets, but they require new lenses and displays.
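Why the gap is needed can be seen from the thin-lens equation: a simple magnifier forms a comfortable, distant virtual image only when the display sits just inside the lens's focal length, so the display-to-lens gap ends up close to the focal length. The sketch below treats the headset lens as an ideal thin lens; the 40 mm focal length and 2 m virtual image distance are illustrative assumptions, not Oculus specifications.

```python
def display_distance_mm(focal_length_mm: float, virtual_image_mm: float) -> float:
    """Lens-to-display gap that places a virtual image virtual_image_mm away.

    Thin-lens equation 1/f = 1/d_o + 1/d_i, with a virtual image d_i = -D:
    1/d_o = 1/f + 1/D, so d_o = f*D / (f + D).
    """
    f, d = focal_length_mm, virtual_image_mm
    return f * d / (f + d)


def virtual_image_mm(focal_length_mm: float, display_mm: float) -> float:
    """Inverse check: how far away the virtual image appears for a given gap."""
    if display_mm >= focal_length_mm:
        raise ValueError("display must sit inside the focal length to form a virtual image")
    # d_i = 1 / (1/f - 1/d_o) is negative (virtual image); return its magnitude.
    return -1.0 / (1.0 / focal_length_mm - 1.0 / display_mm)


gap = display_distance_mm(40.0, 2000.0)
print(f"{gap:.1f} mm")  # 39.2 mm: almost the full focal length
```

Moving the virtual image farther away only pushes the gap closer to the focal length, which is why shrinking the headset calls for different optics rather than simply moving the display closer.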

Prototype of the FRL virtual reality glasses.

Facebook Reality Labs' proposed solution for a smaller VR headset, or glasses, combines holographic lenses with polarization-based optical "folding" to create functional VR glasses about 9 mm thick. The overall goal is slim, glasses-like VR headsets with a wide field of view and retinal resolution.
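Combining a wide field of view with retinal resolution is demanding because the required pixel count grows with the field of view. As a rough sketch, assume "retinal resolution" means about one arcminute per pixel (roughly the acuity of 20/20 vision, or about 60 pixels per degree) and that pixels are spread evenly in angle; the function name and field-of-view values below are illustrative assumptions, not FRL figures.

```python
ARCMIN_PER_DEGREE = 60


def pixels_for_retinal_resolution(fov_deg: float, arcmin_per_pixel: float = 1.0) -> int:
    """Pixels needed across one axis of the field of view.

    Assumes each pixel should subtend arcmin_per_pixel arcminutes and that
    pixel density is uniform in angle (a simplification for real optics).
    """
    return round(fov_deg * ARCMIN_PER_DEGREE / arcmin_per_pixel)


# Illustrative field-of-view values only.
for fov in (50, 90, 110):
    print(f"{fov} deg -> {pixels_for_retinal_resolution(fov)} px per axis")
```

Doubling the field of view doubles the pixels needed per axis, and so roughly quadruples the total for a square field.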

FRL's approach to developing VR 'glasses'.

Folded optics are central to shortening the distance between the display and the wearer's eyes. The light still travels the same optical distance, but polarization folds that path back on itself so it fits into a much thinner space. Shrinking the gap this way allows the display and lenses to be combined into a single thin unit, with the lenses implemented as holograms.
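A common polarization-based fold (often called a "pancake" design) sends light across the cavity between a half mirror and a reflective polarizer three times: forward, back, and forward again. The sketch below estimates the resulting thickness and the best-case light efficiency under that idealized model. The source does not describe FRL's exact optical stack, so the three-pass geometry, the lossless 50/50 half mirror, and the example path length are assumptions.

```python
def folded_gap_mm(required_path_mm: float, passes: int = 3) -> float:
    """Physical cavity thickness when light crosses the cavity `passes` times.

    Idealized: ignores lens thickness and any holographic elements.
    """
    return required_path_mm / passes


def ideal_fold_efficiency(half_mirror_transmittance: float = 0.5) -> float:
    """Best-case fraction of display light reaching the eye.

    The half mirror transmits the light once (T) and later reflects it once
    (R = 1 - T, assuming no absorption); the polarizing elements are treated
    as lossless, so all loss comes from the half mirror.
    """
    t = half_mirror_transmittance
    return t * (1.0 - t)


print(folded_gap_mm(39.0))       # 13.0 mm of cavity for 39 mm of optical path
print(ideal_fold_efficiency())   # 0.25: at most a quarter of the light survives
```

The trade-off this illustrates is that folding buys thickness at the cost of brightness, which is one reason such designs call for new displays as well as new lenses.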

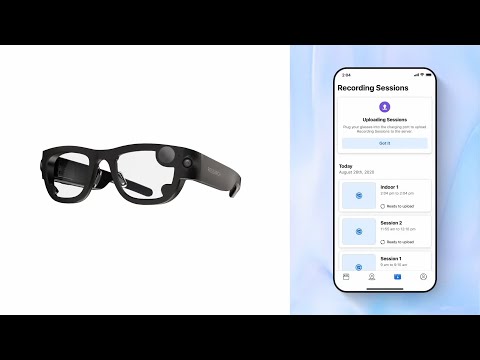

Facebook Reality Labs' Project Aria is a research project aimed at developing augmented reality glasses. It uses a research device worn like traditional glasses. The purpose of the project is to understand what hardware and software would be needed to create AR devices, and how such devices would be useful to wearers. The research device captures the wearer's audio and video and tracks their eye movements and location information.

Image of the Project Aria research glasses.

The ambition for Project Aria is to develop AR glasses that help a wearer perform basic everyday tasks, such as finding their keys, navigating a new city, or capturing a moment. Facebook Reality Labs also envisions using the glasses to call a friend and see them as if they were in the wearer's environment. The glasses could also offer a digital assistant capable of detecting road hazards, surfacing important statistics or metrics during a meeting, or helping the wearer hear better in a noisy environment. Facebook Reality Labs suggests that developing such AR technology would require enhanced visual and audio technologies, contextualized artificial intelligence, and the ability to house the technology in a lightweight frame.

Project Aria and the development of AR glasses also offer new advertising opportunities beyond existing AR uses such as trying on clothes or seeing furniture in a room. Facebook Reality Labs expects AR glasses to offer wearers contextual, relevant information, possibly as a layer of virtual information unique to each wearer. The glasses would also let companies advertise from new perspectives and in new environments, and target those advertisements using the contextual and personal data generated through wearers' activities.

Beyond advertising, the glasses' contextual information could offer wearers different visual layers depending on what they are using the glasses for, which in turn could determine how immersive those layers are. For example, a person could set up a virtual working environment with layers of notes or decorations, and then "turn off" that virtual work area at the end of the workday, allowing deeper immersion while working and a clearer separation afterward.

Visualization of contextual layers in AR.

To build these capabilities into its AR glasses, Facebook Reality Labs is developing a semantics engine for augmented reality. This would mirror the human ability to understand a room: what is movable, what is fixed, and what each element of the room is. An AR engine that understands environments this way lets developers create content for specific places and contexts. This contextual knowledge could also influence how content for larger environments, such as a city, is created and how it translates across culturally diverse contexts.

Scene reconstruction from Facebook Reality Labs LiveMaps.

LiveMaps, an application of Project Aria, uses computer vision to construct a virtual representation of the parts of the world relevant to a wearer. These maps would allow the AR glasses to see, analyze, and understand their environment, and could let the device track changes in the environment and update them in real time. Facebook Reality Labs expects the computing power needed for these operations to exceed what a pair of AR glasses can handle, so LiveMaps provides a cloud environment the glasses can tap into.

LiveMaps is part of a Cloud AR project to make AR persistent and anchored to precise locations in the physical world. Developing Cloud AR requires precise 3D mapping of large areas, and would allow AR experiences to persist in specific places, such as a shop with a virtual layer of information about its products, or a map with traffic information.
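A persistent anchor can be thought of as content stored against a position in a shared map, so that any device localized in that map can later retrieve it. The toy in-memory sketch below shows that idea with a radius query; the `Anchor` and `AnchorStore` names are hypothetical, and it deliberately omits the hard parts the text names, namely precise large-area 3D mapping and locating the glasses within that map.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Anchor:
    """Hypothetical persistent anchor: content pinned to a point in a shared 3D map."""
    anchor_id: str
    position: tuple  # (x, y, z) in metres, in the shared map's coordinate frame
    content: str


class AnchorStore:
    """Toy stand-in for a cloud service that serves anchors to many devices."""

    def __init__(self):
        self._anchors = {}

    def put(self, anchor: Anchor) -> None:
        # Re-putting the same anchor_id replaces the old entry.
        self._anchors[anchor.anchor_id] = anchor

    def nearby(self, device_position, radius_m: float):
        """Anchors within radius_m of the device, nearest first."""
        hits = [a for a in self._anchors.values()
                if math.dist(a.position, device_position) <= radius_m]
        return sorted(hits, key=lambda a: math.dist(a.position, device_position))


store = AnchorStore()
store.put(Anchor("shop-1", (0.0, 0.0, 0.0), "Product info"))
store.put(Anchor("road-7", (120.0, 0.0, 0.0), "Traffic: heavy"))
print([a.content for a in store.nearby((2.0, 0.0, 1.0), 10.0)])  # ['Product info']
```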

Image from a patent for Facebook's planned AR glasses.

As what is described as the next step in Facebook Reality Labs' development of Project Aria and AR glasses, Facebook is expected to launch smart glasses in 2021. These are expected to be similar to Snapchat's Spectacles, which were released in 2016 and were a failure. The company has provided little detail about the smart glasses' capabilities, except to say they will not have a display. The device is being developed in partnership with Luxottica and is expected to carry Ray-Ban branding. Facebook has said its eventual AR products will have a functioning display.

The partnership is to develop an Augmented Reality Developer Challenge aimed at creating AR applications and services and developing smart communities. The challenge includes a community-driven competition focused on problems in healthcare, education, and workforce development. The award offers $20,000 in cash, along with community support, toward a total investment of $40,000.

Facebook Reality Labs is also developing the interface AR glasses will need. Part of this AR user interface is being developed through Facebook's Oculus VR systems, from which Facebook Reality Labs can build data sets of user input and preferences, including preferred input methods, preferred menu types, and how users interact with their surroundings while in VR. This data can help a VR headset identify its wearer, much like a fingerprint, and these wearer "fingerprints" can be applied to developing an adaptive and intuitive AR interface. The interface is built on two pillars:

The first pillar is ultra-low-friction input, which aims to create an intuitive, short path from thought to action. Actions, such as selecting from a menu with hand gestures or voice commands, are enabled by hand-tracking cameras, a microphone array, and eye-tracking technology. Further work focuses on a natural, unobtrusive way of controlling AR glasses; to this end, Facebook Reality Labs has explored electromyography (EMG) wearables that use the electrical signals traveling from the spinal cord to the hand to control the device and create frictionless input.

The second pillar is the use of artificial intelligence, context, and personalization to shape the effects of a user's input so that they serve the user's needs in the moment. This also works toward an interface that adapts itself to the user, which would require AI models that infer what information a wearer might need in various contexts. Understanding the wearer's context should also make an AR system more useful while still giving the wearer a degree of control over the experience.

Anechoic chamber used by Facebook Reality Labs for modeling spatial sound.

In 2020, Facebook Reality Labs announced an increased interest in audio presence technologies. Audio presence is the feeling that a virtual sound has a physical presence in the same space as the listener, and the division aims to make virtual audio indistinguishable from a real-world source. To do this, Facebook Reality Labs has studied personalized spatial audio, which comes down to a data representation of how a particular person hears spatialized sound, known as a head-related transfer function (HRTF). Generic HRTFs are already used in video games and VR games to provide spatial accuracy for a "general" person, but they do not work for everyone because, as researchers have found, the way people experience audio is highly individual at both a physical and a perceptual level. Facebook Reality Labs is working on personalized HRTFs for users of its VR products, based on something as simple as a photograph of a user's ears.
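A full HRTF captures direction-dependent filtering by the head, torso, and outer ears. One of its simplest ingredients, the interaural time difference (ITD), already shows why personalization matters: it depends on the size of the listener's head. The sketch below uses Woodworth's classic spherical-head approximation and delay-only impulse responses as a crude stand-in for measured or personalized HRTFs; it is not FRL's method, and the default 8.75 cm head radius is an assumed typical value.

```python
import math

SPEED_OF_SOUND_M_S = 343.0


def woodworth_itd(azimuth_rad: float, head_radius_m: float = 0.0875) -> float:
    """Interaural time difference in seconds (Woodworth spherical-head model).

    ITD = (a / c) * (theta + sin(theta)) for a distant source at azimuth theta,
    valid for |theta| <= pi/2; a = head radius, c = speed of sound.
    """
    return head_radius_m / SPEED_OF_SOUND_M_S * (azimuth_rad + math.sin(azimuth_rad))


def toy_hrir_pair(azimuth_rad, head_radius_m, sample_rate):
    """Delay-only impulse responses (left, right); positive azimuth = source on the right."""
    delay = round(abs(woodworth_itd(azimuth_rad, head_radius_m)) * sample_rate)
    near, far = [1.0], [0.0] * delay + [1.0]
    return (far, near) if azimuth_rad >= 0 else (near, far)


def convolve(signal, ir):
    """Direct-form convolution of a signal with an impulse response."""
    out = [0.0] * (len(signal) + len(ir) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(ir):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter a mono source with each ear's impulse response (time-domain HRTF)."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# A source directly to the right reaches the left ear about 0.66 ms later;
# a larger head would make that delay longer.
left, right = toy_hrir_pair(math.pi / 2, 0.0875, 48000)
out_left, out_right = render_binaural([1.0, 0.5], left, right)
```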

Image from patent filed for Facebook AR glasses showing how FRL's audio technology can be integrated into AR glasses.

Creating personalized spatial audio also requires understanding how sound travels through a particular space; for example, sound can bounce off a surface before it reaches the ear, which changes how it is heard. This is another factor Facebook Reality Labs must consider to create more realistic audio presence. Understanding how sound behaves in a room would help the division create audio that makes users feel as if they are present in a place. Uses include phone and conference calls in which participants feel as if they are in the same room, and more immersive gaming with a VR headset.
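To first order, a reflection off a flat surface behaves like sound from a mirror-image source behind that surface: it arrives later and weaker than the direct sound. This is the image-source method. The minimal sketch below handles one floor reflection; the floor at y = 0, the 0.7 reflection coefficient, and the simple 1/r amplitude falloff are assumptions, and real rooms involve many reflections and frequency-dependent absorption.

```python
import math

SPEED_OF_SOUND_M_S = 343.0


def mirror_across_floor(point):
    """Image source for a reflecting plane at y = 0."""
    x, y, z = point
    return (x, -y, z)


def arrivals(source, listener, reflection_coeff=0.7):
    """(delay_s, amplitude) for the direct path and one floor reflection.

    Amplitude falls off as 1/distance; the reflected path is further scaled by
    an assumed, frequency-independent reflection coefficient.
    """
    paths = [
        (math.dist(source, listener), 1.0),
        (math.dist(mirror_across_floor(source), listener), reflection_coeff),
    ]
    return [(d / SPEED_OF_SOUND_M_S, gain / d) for d, gain in paths]


# Talker and listener both at 1 m height, 2 m apart.
for delay, amp in arrivals((0.0, 1.0, 0.0), (2.0, 1.0, 0.0)):
    print(f"{delay * 1000:.2f} ms, amplitude {amp:.3f}")
```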

Facebook Reality Labs is also developing what it calls perceptual superpowers, another strand of the division's audio research. These are intended to let a user hear better in a noisy environment, for example by turning up the volume of the person speaking while turning down background noise. This works first through microphones on a pair of AR glasses that characterize the sounds in an environment; then, by tracking the wearer's movements, the system infers which sounds the wearer is most interested in and enhances them.

Detailed look at how the assembly of FRL's audio engine would interact with the ear.

Similar to the perceptual superpowers, Facebook Reality Labs has used near-field beamforming in a pair of AR glasses with a microphone array to isolate a speaker on a phone call and remove the background noise that may drown out the speaker's voice.
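The simplest beamformer is delay-and-sum: delay each microphone's signal so that sound from the target lines up across channels, then average. The target adds up coherently, while sound from other directions is partly averaged away. In the near field (a talker close to the glasses), the delays come from the true source-to-microphone distances rather than a plane-wave assumption. This is a minimal sketch, not FRL's algorithm; the two-microphone geometry, 48 kHz sample rate, and integer-sample delays are assumptions, and practical systems typically use fractional delays, more microphones, and adaptive weighting.

```python
import math

SPEED_OF_SOUND_M_S = 343.0


def steering_delays(source, mics, sample_rate):
    """Integer-sample delay of each mic relative to the mic closest to the source.

    Near-field: uses the actual source-to-mic distances (spherical wavefronts)
    instead of assuming a far-away plane wave.
    """
    dists = [math.dist(source, m) for m in mics]
    nearest = min(dists)
    return [round((d - nearest) / SPEED_OF_SOUND_M_S * sample_rate) for d in dists]


def delay_and_sum(recordings, delays):
    """Advance each channel by its delay so the target lines up, then average."""
    n = min(len(r) - d for r, d in zip(recordings, delays))
    return [sum(r[i + d] for r, d in zip(recordings, delays)) / len(recordings)
            for i in range(n)]


# Hypothetical geometry: two mics 10 cm apart on a frame, mouth 5 cm below mic 0.
mics = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0)]
print(steering_delays((0.0, -0.05, 0.0), mics, 48000))  # [0, 9]
```

After alignment, an impulse from the talker keeps its full amplitude, while an interfering sound that reaches both microphones at the same moment is split across two output samples at half amplitude each.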

Part of Facebook Reality Labs' focus on using AR and VR technologies for human connection is the development of avatars in a project called Codec Avatars. This project uses 3D capture technology and artificial intelligence systems developed by Facebook Reality Labs to let people create lifelike virtual avatars, helping social connections in VR feel more natural and real. Very lifelike avatars already exist, but they are usually created by artists; Facebook Reality Labs hopes to automate this process and reduce the time needed to create a lifelike avatar. Avatars have been used in video games for years, but Facebook Reality Labs hopes to capture the small facial gestures that make interactions feel real, so that lifelike avatars become effective for work or meetings held in a virtual space rather than a physical one.

Outside one of Facebook Reality Labs' Codec Avatars capture studios.

To develop lifelike avatars, Facebook Reality Labs has to work with complex data points, including speech, body language, and linguistic cues, in order to render realistic virtual humans. To capture and measure these data points accurately, the lab has developed many ways of measuring a person and the small details of human expression. These expressions are handled by an encoder and a decoder. The encoder uses cameras and microphones on a headset to capture what a subject is doing and where they are doing it, and converts this information into a unique code, a numeric representation of the person's body. The decoder translates the code into the audio and video signals the recipient sees as a representation of the sender. A further goal is to develop the technology to the point where a capture studio is unnecessary and consumers can create their own avatars with less data and less sophisticated camera equipment.
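The encoder/decoder split means only the compact code, not the raw camera and microphone data, needs to travel between people. The toy below shows that encode, transmit, decode flow with a fixed linear projection. Codec Avatars learn their encoder and decoder with AI systems trained on capture-studio data, so the basis, the dimensions, and the function names here are illustrative assumptions only.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def encode(measurements, basis):
    """Sender side: compress a measurement vector into a short code."""
    return [dot(measurements, b) for b in basis]


def decode(code, basis):
    """Receiver side: rebuild an approximation of the measurements from the code."""
    dim = len(basis[0])
    return [sum(c * b[i] for c, b in zip(code, basis)) for i in range(dim)]


# Hypothetical orthonormal basis: 4 measurements -> 2-number code.
BASIS = [
    [0.5, 0.5, 0.5, 0.5],
    [0.5, -0.5, 0.5, -0.5],
]

frame = [1.5, 0.5, 1.5, 0.5]   # a frame the basis can represent exactly
code = encode(frame, BASIS)    # only these 2 numbers are sent: [2.0, 1.0]
print(decode(code, BASIS))     # [1.5, 0.5, 1.5, 0.5]
```

Frames the basis cannot represent come back only approximately; a learned model aims to make the code capture the details that matter for a convincing avatar.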

A person in one of the Codec Avatars capture studios.

This is where the Codec Avatars artificial intelligence engine becomes important. Recording sessions in some of Facebook Reality Labs' 3D capture studios currently last fifteen minutes, and a studio can use as many as 1,700 microphones to reconstruct sound fields accurately for better audio. The capture system gathers 180 gigabytes of data per second, which is then processed into the data used to create the avatar. Once trained, the artificial intelligence systems are intended to build a realistic avatar from a few pictures or videos. One of the largest hurdles for such a system is the diversity of human beings.
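For scale, a back-of-the-envelope calculation: assuming, as an upper bound, that the 180 GB/s rate is sustained for the whole fifteen-minute session and using decimal units, one session produces 180 GB/s × 900 s = 162,000 GB, or about 162 TB. The helper name below is hypothetical.

```python
def capture_volume_tb(rate_gb_per_s: float, minutes: float) -> float:
    """Data volume in terabytes if rate_gb_per_s is sustained for `minutes`.

    Decimal units (1 TB = 1,000 GB). Treat the result as an upper bound, since
    the text does not say the peak rate is held for the whole session.
    """
    return rate_gb_per_s * minutes * 60 / 1000


print(capture_volume_tb(180, 15))  # 162.0
```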

One concern Facebook Reality Labs acknowledges is whether avatars will create trust issues, particularly in distinguishing real from fake events and in properly handling the unique, personal data involved in mapping a person for an avatar. The emergence of deepfakes makes this concern more pressing. The research team has suggested these issues could be mitigated through a combination of user authentication, device authentication, and hardware encryption, coupled with a robust system for handling and storing data.

Part of making this technology work is what Facebook Reality Labs calls social presence: roughly, the feeling of being in someone else's presence, which is often missing from video calls even when the two people can see each other. The research team hopes that finer avatar detail, more detailed mouth movement (especially while talking), and realistic eye contact between avatars can create that social presence, so that two people meeting in a virtual room feel as if they are together in a real place.

A Facebook Reality Labs team based in Sausalito is exploring a different way of developing lifelike avatars, using physics, biomechanics, and neuroscience to create avatars that can interact with a virtual environment in a lifelike way.

Facebook Reality Labs is using technology developed by CTRL-Labs, a startup Facebook acquired in 2019, to develop a wristband that uses electromyography (EMG) to translate subtle neural signals into actions such as typing, swiping, or playing games. The bands are also expected to offer haptic feedback and to be more responsive than basic hand tracking. In 2021, the working prototype could recognize basic gestures that Facebook calls "clicks," which are meant to be reliable and easy to execute. In principle, the neural wristband tracks the nerve signals sent to the wearer's fingers, so a wearer could type on a virtual keyboard while the band adapts to the way they type and automatically corrects common typos.
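A common, generic way to turn a raw EMG signal into discrete events such as clicks is to rectify it, smooth it into an amplitude envelope, and report an event when the envelope rises past a threshold. The sketch below shows that technique on a synthetic signal; it is not CTRL-Labs' or FRL's actual decoder, and the window length and threshold are hypothetical values that would need per-user calibration.

```python
def detect_clicks(samples, window=4, threshold=0.5):
    """Return sample indices where a burst of muscle activity begins.

    Steps: rectify (abs), smooth with a trailing moving average to get an
    amplitude envelope, then report an onset when the envelope first reaches
    the threshold. No new onset is reported until the envelope drops back
    below the threshold.
    """
    onsets = []
    active = False
    for i in range(len(samples)):
        start = max(0, i - window + 1)
        # Dividing by the full window length makes the envelope ramp up at the start.
        envelope = sum(abs(s) for s in samples[start:i + 1]) / window
        if envelope >= threshold and not active:
            onsets.append(i)
            active = True
        elif envelope < threshold:
            active = False
    return onsets


# Hypothetical synthetic signal: quiet, a burst, quiet, a second burst.
burst = [1.0, -1.0] * 5
signal = [0.0] * 20 + burst + [0.0] * 20 + burst + [0.0] * 10
print(detect_clicks(signal))  # [21, 51]
```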

Example of a prototype game FRL expects wearers of its neural wristband will be able to play in the future.

CTRL-Labs originally described the ultimate goal of an EMG wristband as letting a user type the same way without gesturing or moving their hands at all, simply by thinking about moving them. Such a wristband would become another component of Facebook Reality Labs' AR glasses, helping the wearer interact with the world in new ways. Facebook says that, with further development, the neural wristband could operate as a brain-computer interface, though with a very different technological philosophy from other brain-computer interfaces such as Neuralink.

For the device to understand what a wearer wants to do and interpret those signals without requiring movement (an end goal of the project), it needs an underlying artificial intelligence system that adjusts to the wearer, learns to anticipate what the wearer might want to do next, and eventually learns what wearer-specific gestures mean.
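The wristband's typo correction, and the broader goal of a system that adjusts to each wearer, can be illustrated with a classic technique: suggest the dictionary word at the smallest edit distance from what was typed, and break ties using how often this particular wearer uses each word. This is a hypothetical sketch (the class and method names are invented), not FRL's system.

```python
from collections import Counter


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


class AdaptiveCorrector:
    """Hypothetical per-wearer corrector that learns from the words a user confirms."""

    def __init__(self, vocabulary):
        self.counts = Counter({w: 1 for w in vocabulary})

    def learn(self, word: str) -> None:
        self.counts[word] += 1

    def correct(self, typed: str, max_distance: int = 2) -> str:
        if typed in self.counts:
            return typed
        candidates = [w for w in self.counts if edit_distance(typed, w) <= max_distance]
        if not candidates:
            return typed
        # Closest spelling first; ties go to the word this wearer uses most.
        return min(candidates, key=lambda w: (edit_distance(typed, w), -self.counts[w]))


c = AdaptiveCorrector(["hello", "help", "world"])
c.learn("hello")
print(c.correct("helo"))  # hello
for _ in range(3):
    c.learn("help")
print(c.correct("helo"))  # help: this wearer now uses "help" more often
```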

Facebook Reality Labs suggests the wristbands would work with its AR glasses to create a more seamless way of interacting with the real and virtual worlds. This, the company suggests, would create a different way of experiencing computing, in which the user interacts with the digital world in three dimensions. The wristband is intended to be a ubiquitous input device that the wearer does not need to think about, one that adapts its gestures to those the wearer is already familiar with.

Facebook Reality Labs has said it chose a wrist-based wearable because the wrist is already a traditional place to wear technology, such as a watch. A wrist device could fit into social contexts, be comfortable for all-day wear, and reduce the friction experienced with other input methods such as phones, game controllers, or voice. In addition, hand-based input lets a wearer use comfortable, intuitive interactions to direct the augmented reality. Using the wrist as the input location, rather than a more brain-centric one, is also described as a way to distinguish thoughts that do not become actions from thoughts that do. The wrist acts as a body-based layer between the two: only signals that actually reach the wrist are interpreted as actions to be performed.

Another advantage of the wrist, especially for interacting with virtual or augmented reality, is that haptic feedback there can give the wearer the sensation of touching or feeling something more readily than other neural interfaces can. For example, when typing, a wrist-based system can give subtle haptic feedback each time it decides a character has been typed, letting the virtual typist move on to the next letter without confusion, much like the click or "bottoming-out" feeling of a traditional computer keyboard. This feedback would resemble the way current wearables alert a wearer to a notification (another feature Facebook Reality Labs suggests these wristbands could offer), but with more nuanced haptics that let users understand how urgent a notification is.

A Bellowband prototype.

Similarly, Facebook Reality Labs is working on new types of haptic feedback systems that differ from the traditional rumble systems found in smartphones, game controllers, and current wearables. Bellowband, one prototype system, is a lightweight, soft wristband with eight pneumatic bellows. The air in these bellows can be controlled to produce pressure and vibration in complex patterns in space and time.
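Controlling pressure "in complex patterns in space and time" amounts to choosing a pressure for each bellow at each instant. As an illustration, the sketch below computes a pressure wave that travels around the wrist across eight bellows. The waveform, the one-second period, and the 20 kPa peak are hypothetical values, not Bellowband's published parameters.

```python
import math

NUM_BELLOWS = 8  # from the text: eight pneumatic bellows around the wrist


def traveling_wave(t, period_s=1.0, max_kpa=20.0):
    """Pressure (kPa) for each bellow at time t, for a wave circling the wrist.

    Bellow k peaks when t / period_s equals k / NUM_BELLOWS; negative half-cycles
    are clipped to zero because a bellow can only push, not pull.
    """
    pressures = []
    for k in range(NUM_BELLOWS):
        phase = 2 * math.pi * (t / period_s - k / NUM_BELLOWS)
        pressures.append(max_kpa * max(0.0, math.cos(phase)))
    return pressures


# At a quarter period, the peak has moved from bellow 0 to bellow 2.
print([round(p, 1) for p in traveling_wave(0.25)])
```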

A Tasbi prototype.

Another haptic feedback prototype, Tasbi (Tactile and Squeeze Bracelet Interface), uses six vibrotactile actuators coupled with a wrist-squeeze mechanism. This would offer new ways of interacting and nuanced ways to render virtual buttons, textures, or moving objects. The goal of both prototypes is to develop a system that, using the biological phenomenon of sensory substitution, creates a feel indistinguishable from real-life objects and activities.

Facebook Reality Labs, in partnership with the University of California, Santa Barbara, is developing a haptic feedback device in the form of an elastic sleeve called PneuSleeve. The sleeve is made from elastic knit fabric and Velcro: the fabric provides a close fit to the skin, while the Velcro fastening allows safe, quick release. The sleeve can render a range of stimuli, such as compression, skin stretch, and vibration, using a series of stretchable tubes and actuators that generate stimuli through controlled pneumatic pressure within the tubes. The research team has suggested that this form factor allows the device to be integrated into garments for everyday computing interactions, while offering a reasonable design space and leaving the hands free. In addition, the composite structure of fabrics and elastomers maintains a better fit, which makes sensing and the transmission of stimuli more efficient.

Diagram of the PneuSleeve.

The actuator design in PneuSleeve is based on Fluidic Fabric Muscle Sheets (FFMS) that operate as inverse pneumatic artificial muscles. The setup consists of six single-channel FFMS actuators: two provide radial compression and four generate linear stretch, while all six can generate vibration. The sleeve uses a closed-loop controller, fed by a soft force sensor, to regulate the grounding compression force. The sensor works on capacitive sensing principles, using a layered structure of dielectrics, electrodes, and insulators.
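Two ideas in this paragraph can be sketched together. A capacitive force sensor follows the parallel-plate relation C = ε0 × εr × A / d: pressing on the layered dielectric shrinks the gap d and raises the capacitance, which can be inverted to estimate force. A closed-loop controller then compares that estimate with a target and adjusts the actuator pressure. All parameters, the linear-spring dielectric, the instantaneous force-per-kPa actuator model, and the integral-only control law are hypothetical simplifications, not the published PneuSleeve design.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity, F/m

# Hypothetical sensor parameters (not PneuSleeve's published values):
REL_PERMITTIVITY = 3.0     # relative permittivity of the dielectric layer
AREA_M2 = 1e-4             # 1 cm^2 electrode overlap
REST_GAP_M = 1e-3          # 1 mm uncompressed dielectric
STIFFNESS_N_PER_M = 1e4    # dielectric treated as a linear spring


def capacitance_f(force_n: float) -> float:
    """Parallel-plate model: compression thins the dielectric and raises C."""
    gap = REST_GAP_M - force_n / STIFFNESS_N_PER_M
    return EPSILON_0 * REL_PERMITTIVITY * AREA_M2 / gap


def force_from_capacitance(c_f: float) -> float:
    """Invert the sensor model: capacitance -> gap -> force."""
    gap = EPSILON_0 * REL_PERMITTIVITY * AREA_M2 / c_f
    return (REST_GAP_M - gap) * STIFFNESS_N_PER_M


def regulate(target_n: float, steps: int = 30, gain_n_per_kpa: float = 0.5,
             ki: float = 1.0, max_kpa: float = 50.0) -> float:
    """Integral controller that adjusts pneumatic pressure until sensed force hits target.

    Toy actuator: compression force = gain * pressure, with no dynamics.
    With gain * ki = 0.5, the force error halves on every step.
    """
    pressure = 0.0
    force = 0.0
    for _ in range(steps):
        sensed = force_from_capacitance(capacitance_f(force))  # feedback via the sensor
        pressure += ki * (target_n - sensed)
        pressure = min(max(pressure, 0.0), max_kpa)  # assumed valve limits
        force = gain_n_per_kpa * pressure
    return force


print(f"{capacitance_f(0.0) * 1e12:.2f} pF at rest")  # 2.66 pF
print(f"{regulate(2.0):.4f} N")                       # converges to 2.0000 N
```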